Analytics IS Part of the Feature, not Separate!

Hey, I’m Ant, and welcome to my newsletter, where you will find practical lessons on building products and businesses and on being a better leader.

Analytics is part of the feature, not a separate thing.

It seems like a logical thing, but it’s surprising how many companies and product teams still treat analytics like an optional extra.

Inevitably, when analytics is treated separately, it gets descoped at some stage to make room for more features. That begins a slippery slope of shipping things whose impact you have no way to measure.

Outputs vs Outcomes

Charlie Munger said: “Show me the incentive and I’ll show you the outcome.”

If your company is focused on outputs (e.g. features) then of course analytics will be an afterthought.

After all, analytics is more work!

I can hear the conversation already:

“Let’s do analytics later so we can make this deadline.”

“The priority is getting this out the door. Analytics is a nice-to-have.”

Now, if your company is focused on outcomes, it’s a different story.

You’ll be seeking answers to questions like:

What was the impact?

Was this successful or not?

Are customers using it?

etc.

This means that measurement is no longer a choice. It’s baked in.

The feature is NOT done until you’ve built the analytics and ability to measure its impact.

It’s part of your ‘Definition of Done’.

It’s NOT done until it’s measured!

Consider adding a ‘Measure’ column before ‘Done’ for big features.
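To make that rule concrete, here is a minimal sketch of a board where a card cannot reach ‘Done’ without passing through ‘Measure’ and recording what was observed. Everything in it is hypothetical and for illustration only: the column names, the `FeatureCard` class and its fields are my assumptions, not a real tool’s API.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical board columns; "Measure" sits just before "Done".
COLUMNS = ["In Progress", "Shipped", "Measure", "Done"]


@dataclass
class FeatureCard:
    name: str
    success_metric: str                     # what we expect the feature to move
    column: str = "In Progress"
    observed_impact: Optional[str] = None   # filled in once real data is in

    def advance(self) -> str:
        """Move the card one column to the right, enforcing the rule."""
        idx = COLUMNS.index(self.column)
        if idx == len(COLUMNS) - 1:
            raise ValueError(f"{self.name} is already Done")
        next_column = COLUMNS[idx + 1]
        # The Definition of Done: no recorded impact, no "Done".
        if next_column == "Done" and self.observed_impact is None:
            raise ValueError(
                f"{self.name} can't be Done until its impact is measured"
            )
        self.column = next_column
        return self.column
```

A card created with a name and a success metric can move to ‘Shipped’ and then ‘Measure’, but calling `advance()` again raises an error until `observed_impact` is filled in. The point of the sketch is that shipping and finishing are two different columns.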

I would extend this further.

What’s more important: shipping a feature or achieving the outcome?

Achieving the outcome, right?

Another way to frame this: if you shipped a feature that wasn’t performing, or worse, had a negative impact, what would you do?

You would remove it, of course! And rightly so.

It’s just like when one of your designs doesn’t test well: you change it. You wouldn’t declare the designs done in that scenario, so why do we treat features differently?

We’re quick to mark a feature as ‘done’ as soon as it’s been shipped.

But the work isn’t done. If anything, the real test is just beginning!

In fact, a colleague messaged me earlier today to say congrats on the release that a team I’ve been coaching just shipped. My reply summed up my mentality here pretty well:

“Thanks. This is where the real work starts though… I'm not even thinking it's time to celebrate yet”

Now is the real test.

Real users

Real data and feedback

Finding out where your assumptions were wrong

What you missed

etc…

The problem with treating ‘shipped = done’ is that it’s easy to forget about all the work that happens post-release.

It’s kinda like when I have to remind my son that he’s not ‘done’ playing until he’s cleaned up the mess he just made!

“The Inconvenient Truth”

‘Shipped = Done’ contains a dangerous assumption.

It assumes that you’re going to nail it on the first go.

In fact, this is one of my beefs with trying to quantify ‘value x effort’. When you say that a feature is going to deliver XYZ results, it rarely does so on the first try.

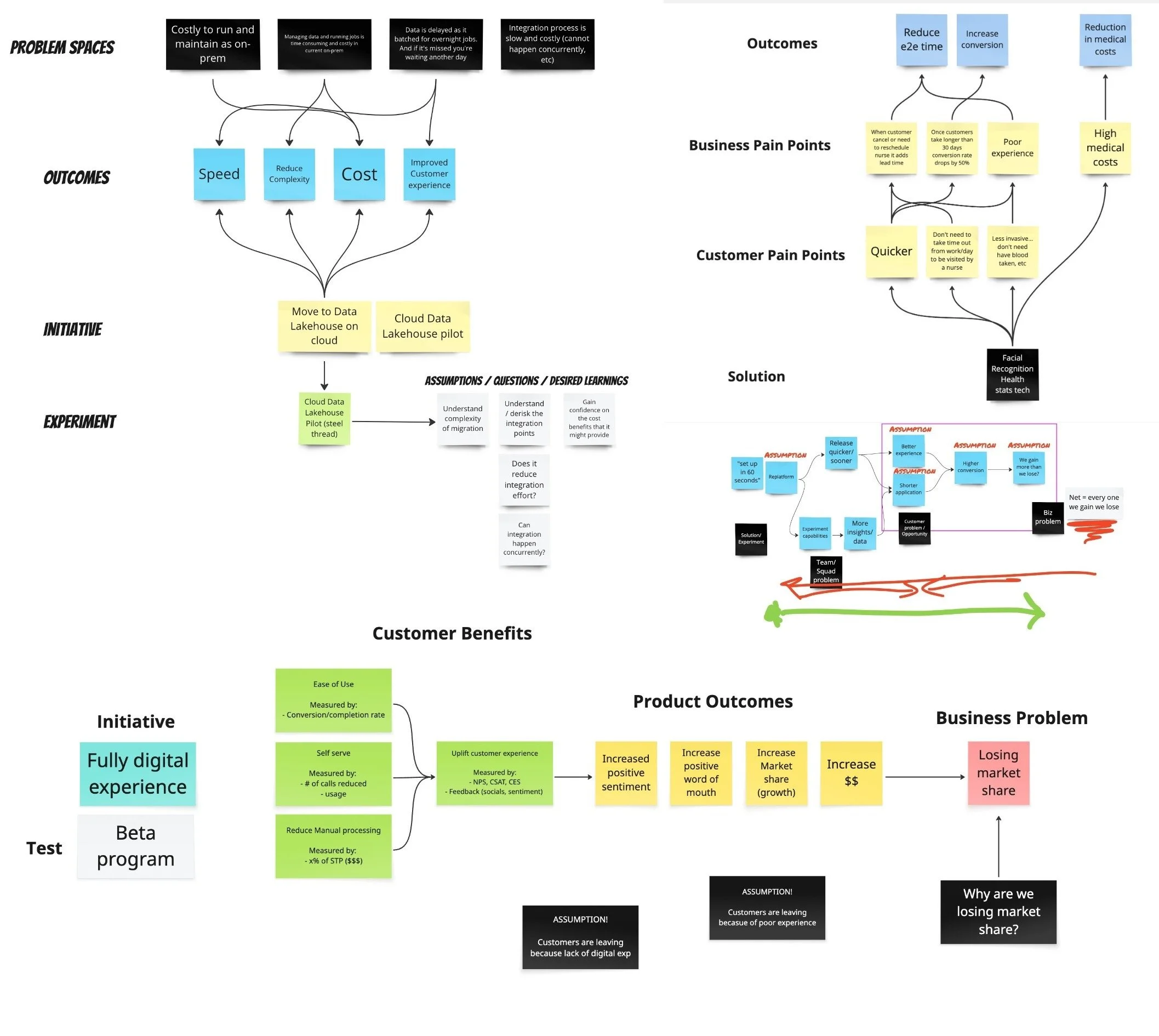

That’s why we decouple outcomes from opportunities and solutions.

It might take a few different solutions, and definitely a few different attempts at each solution, to solve a particular customer opportunity. And it might take several opportunities to realize an outcome.

Marty Cagan referred to this as one of the 'inconvenient truths about product'.

“the second inconvenient truth is that even with the ideas that do prove to be valuable, usable and feasible, it typically takes several iterations to get the implementation of this idea to the point where it actually delivers the expected business value.” - Marty Cagan

This ‘inconvenient truth’ means that you’re rarely going to nail a feature on the first go. So declaring a feature ‘done’ on the first iteration and moving on to the next thing completely ignores what it takes to achieve meaningful impact and build great products.

Here’s how I think about it from a first-principles perspective:

If the feature you just shipped was more important than the next one, which is why it was prioritized first, then shouldn’t we remain focused on it until we’ve achieved the desired impact (or pivoted away)?

Arguably, effort put into the first feature should be more important and higher leverage than effort put into anything else. Unless there was another reason why it was prioritized first?

Move on too quickly and you’re leaving outcomes on the table.

Or at least don’t fill your bucket up with the next feature. Keep capacity to iterate and improve. Too often we go from 100% on Feature X to 100% on Feature Y.

Going from feature to feature will leave you always “one more feature” away from your desired outcome.

Anyways… that’s a long-winded way to say ‘analytics is part of the feature’, not a separate thing 😅

Perhaps more philosophical than usual, but it’s squarely in line with the conversations I’ve been having with my clients of late.

I hope it was valuable nonetheless to step down into some first principles and see how I think about building great products!

🙌

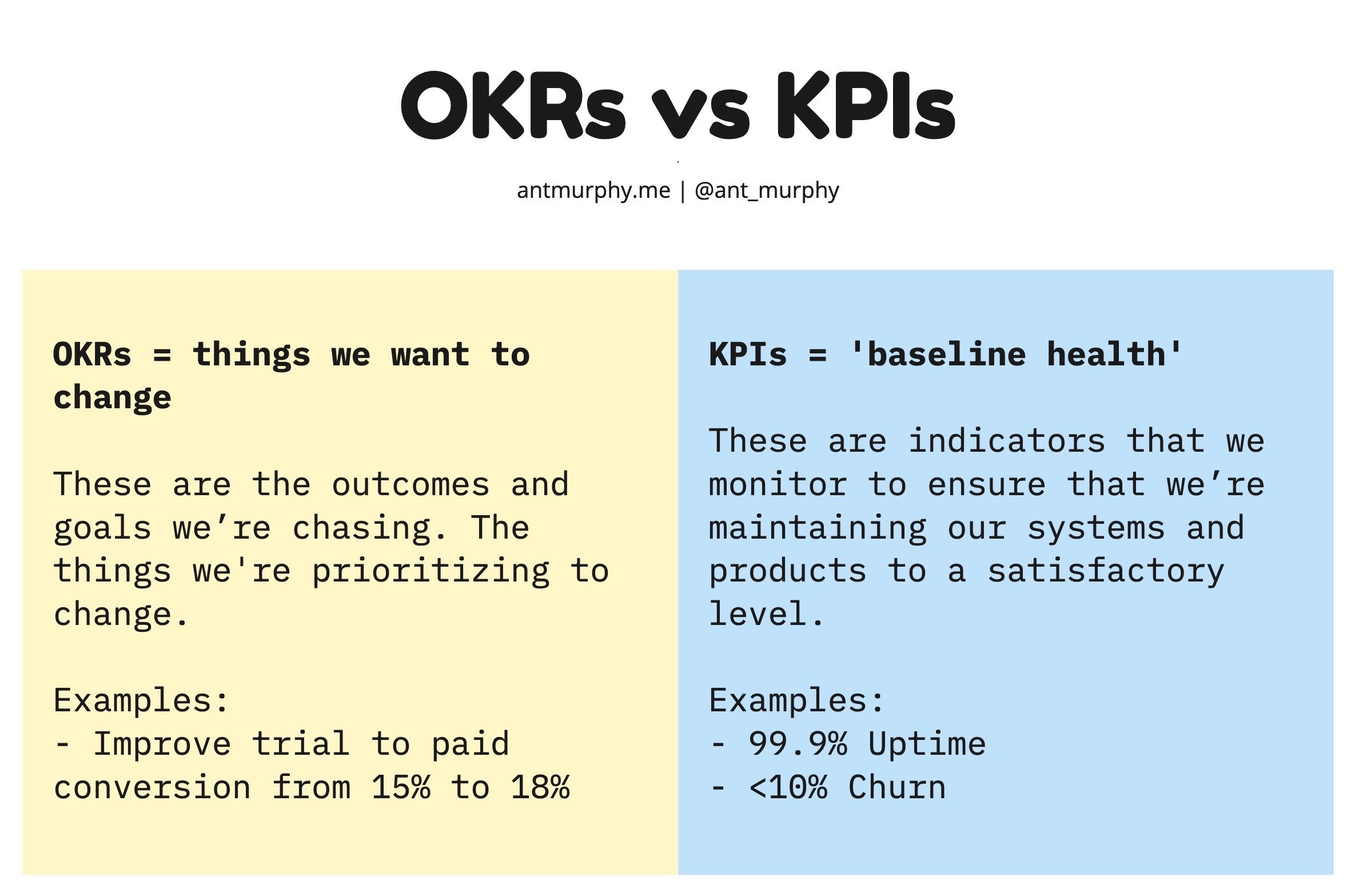

Your OKRs don’t live in a vacuum.

Yet this is exactly how I see many organizations treat their OKRs.

They jump on the bandwagon and create OKRs devoid of any context.

Here’s what I see all the time…