Let's talk Dual Track: Continuous Discovery and Delivery

Hey, I’m Ant, and welcome to my newsletter, where you will find practical lessons on building Products, Businesses and being a better Leader.

Let’s talk Dual Track: continuous discovery and delivery!

I sat down with Shannon Vettes, CEO/CPO of Usersnap, to do a quick round of tips for running discovery and delivery in parallel.

You can watch the full interview here, but I thought I’d do a write-up of my top takeaways (feels weird to call them takeaways because I was the one talking... let’s call them concepts!)

We even coined a new “concept”: the ‘T-shaped approach’ to discovery (trademark pending).

Let’s get into them!

1. Your first iteration will suck

It's extremely rare that your first iteration is amazing. This extends to outcomes as well. In my experience it generally takes AT LEAST 3-4 iterations of something to actually drive outcomes.

“I promise you that behind every successful product there are many iterations and prototypes.” - Marty Cagan

If you accept this ‘inconvenient truth’, as Marty Cagan frames it, your focus should shift away from trying to find the ‘perfect’ solution in discovery and towards reducing the time from insight → impact.

The only way you're going to achieve this is by making discovery and delivery more continuous and in parallel.

2. Big problem = long discovery

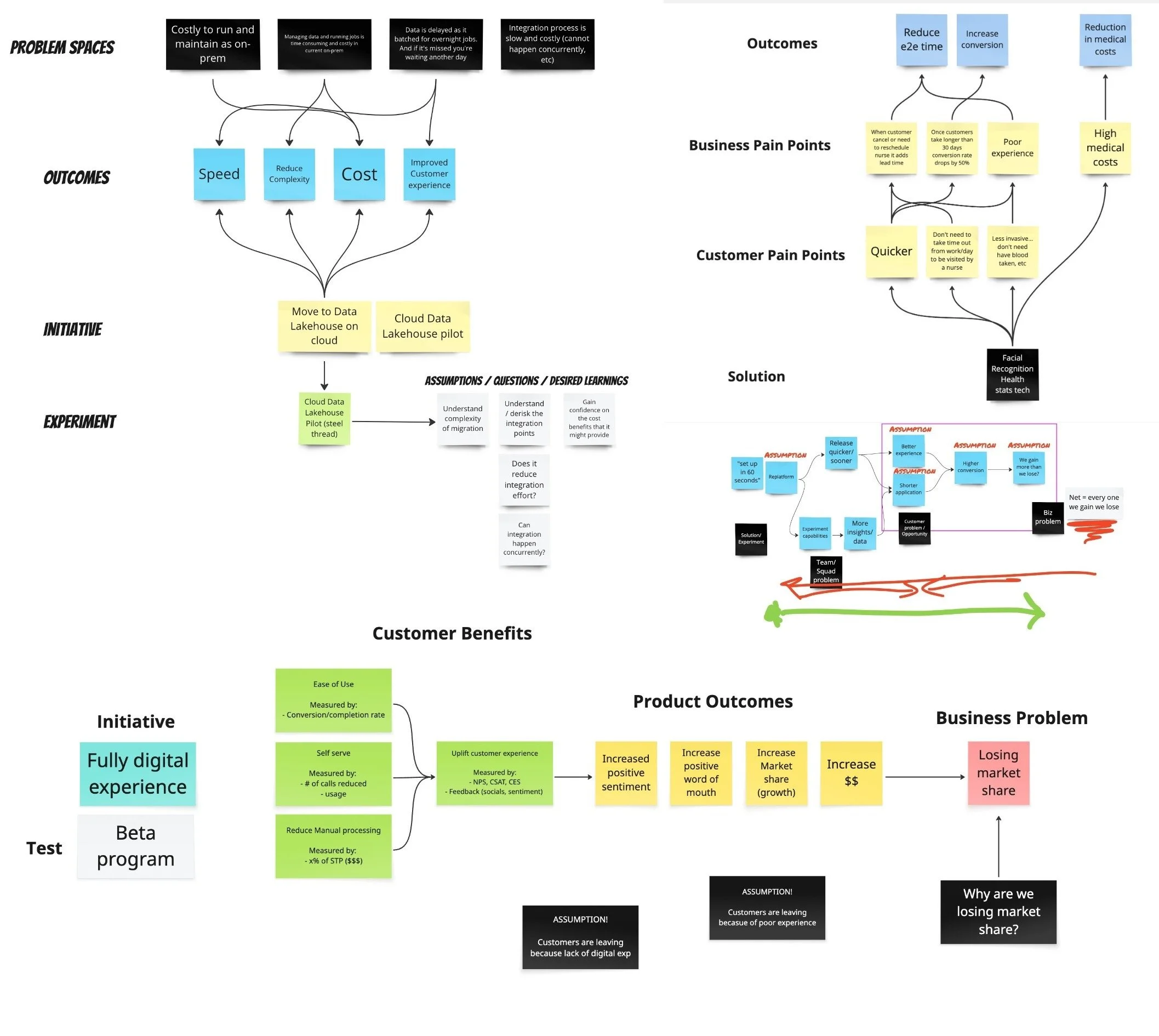

To enable a more continuous approach you can’t be working with large initiatives or big problem spaces.

Unfortunately most teams are ok at breaking down delivery work but they’re not so great at breaking down discovery work.

It’s because breaking down problems is a different skill.

But continuous discovery needs outcomes and problems to be broken down. If the problem you're trying to solve is big and broad or, worse, something you can't directly impact, you’re going to spend a long time trying to solve it.

It’s just physics. The broader the problem, the larger the opportunity space, the bigger the solutions = more time.

To make continuous discovery and delivery work you need to break down these larger problem spaces into smaller chunks that you can rapidly discover and deliver a v1 solution for.

3. The T-shaped approach

Ok what do you do when the space IS big and you don’t have the data or information to break the problem down? What then?

This is where the T-shaped approach comes in (trademark pending, along with merch and a book - kidding of course!).

If you are in this position then you might need to do an initial ‘broad-and-shallow’ pass first. Your goal is not to solve the problem but to get enough insights to break it down so you can then focus on solving a more narrow problem.

An example of this would be trying to improve onboarding. First, spend a day or two going through the onboarding flow, looking at the analytics, user pain points, etc. to identify which part of the flow is creating the biggest problem. Then start there!

4. Confidence is your barometer in Discovery

Prioritising opportunities can be hard because when uncertainty is high and you don’t even know how you’re going to solve them yet, it’s hard to quantify things like impact and effort.

As a result, I like to use confidence as my primary guide for decision making:

How confident are we that this is one of the biggest problems?

Are we confident that this is a real problem for our users?

How confident are we in this solution?

Are we confident enough to ship v1?

etc...

This also means that not everything needs discovery. Some things will be well known, small and low risk. There’s nothing wrong with jumping straight into shipping something or running an A/B test.

5. Iterations > Perfection

Since you’re using confidence as a guide, you need to accept that things aren’t going to be perfect and you’re going to be wrong sometimes. Remember discovery isn’t a silver bullet.

Aim for iterations over making a perfect decision.

A mentor once said to me: “you’re better off doing 10x iterations of 10 user interviews than 100 interviews in one go.”

His point was that things should be emergent.

It’s ok if you don’t understand everything up front. You just need to know enough to start to test and learn.

Hypothetically, say you solved a problem only to learn that it actually wasn’t the biggest problem for your users. Let’s say it’s the 3rd biggest. One way to look at it would be regret: “if only we knew… What a waste of time!” But another way to look at it would be as learning: “awesome, we now know what the biggest problem is, let’s do that next!” And as a bonus, you got something out the door and solved the 3rd biggest problem. That’s great!

6. “Powerful ideas imperfectly measured”

John Cutler said it best: you want to strive for “Powerful ideas imperfectly measured > Perfect measures for not as powerful ideas”.

Teams often get stuck trying to come up with the perfect way to measure something. And that’s not to say you don’t want to invest in trying to come up with good measurements, you absolutely do because bad measurements can give you false positives, lead you down the wrong path or worse, lead to poor decisions. But you also don’t want that to stop you from getting feedback or something out the door.

In the interview with Shannon I used an example from a client. We were going back and forth between different ways to measure a new change which was hard to measure because it didn’t require any user action, meaning there was no way we could just measure ‘clicks’ or anything like that. It was just static. To get around this we added a thumbs up and thumbs down at the bottom with the simple copy “Was this useful? 👍👎”

Sure, it’s not the best measurement. And yes, we’re likely to get a 0.2% response rate. But some feedback is better than none.

(I should note, this isn’t a substitute for user testing and getting more qualitative feedback before proceeding with a solution.)

7. Metrics should lead to action

Have a look at your metrics and ask yourself, “what would I do if this went significantly up or down?”

If you can’t answer that question then why are you tracking it?

Metrics should lead to action. They should instigate a decision.

Take the above example. If we started getting a bunch of thumbs down it would be a signal to start to investigate what’s happening. We would probably start looking at some of the screen recordings, interview some users and start to investigate why we’re getting a negative signal for this change so we can fix it.
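If you like seeing this kind of rule written down, here's a rough sketch in Python of turning the thumbs up/down signal into a next step. Everything specific in it is an assumption for illustration: the function name `feedback_signal`, the minimum of 20 responses and the 50% thumbs-down threshold are made up, so pick numbers that fit your own product.

```python
def feedback_signal(views, thumbs_up, thumbs_down,
                    min_responses=20, max_down_share=0.5):
    """Turn raw thumbs up/down counts into a response rate and a next step.

    The thresholds are illustrative defaults, not recommendations.
    """
    responses = thumbs_up + thumbs_down
    response_rate = responses / views if views else 0.0

    if responses < min_responses:
        # Too little feedback to read anything into it yet
        action = "keep collecting"
    elif thumbs_down / responses > max_down_share:
        # Negative signal: watch recordings, interview users, find out why
        action = "investigate"
    else:
        action = "carry on"
    return response_rate, action


# 10,000 views at roughly a 0.2% response rate gives about 20 responses
rate, action = feedback_signal(views=10_000, thumbs_up=6, thumbs_down=14)
print(f"response rate: {rate:.1%}, next step: {action}")
```

The point isn't the code, it's that the metric comes with a decision attached before the numbers start rolling in.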

Try to avoid tracking things for the sake of it, especially if you read somewhere that “all SaaS companies must track these 7 metrics”. The real questions are: what are your hypotheses, and what are you trying to learn? Then find metrics that track that!

8. Separate your Discovery work from Delivery work

If you want to make continuous discovery and delivery work then you’ll likely need to separate discovery work from delivery.

What does this look like?

Practically it’s having an Opportunity backlog and a Delivery backlog.

Your Opportunity backlog should be a prioritised list of opportunities that you’re continuously identifying. As you ship more things, get more feedback and learn you’ll identify a bunch of new opportunities and those opportunities need to go somewhere.

This is distinctly different from delivery work (features, bugs, etc).

Opportunities need discovery because for the most part you probably have no idea how you’re going to solve them.

So the flow starts to look like this:

Identify Opportunities → Add to Opportunity backlog → Prioritise Opportunity backlog → Discovery on the highest priority Opportunity → Solution(s) added to Delivery backlog → Prioritise Delivery backlog → Build, Test and Release → Measure and Repeat.
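If it helps to see the two backlogs side by side, here's a rough sketch of that flow in Python. It's an illustration only, not a tool you need: the names (`Opportunity`, `DualTrack`, `run_discovery`) and the 0 to 1 confidence score are made up, and sorting by confidence is just one assumption about how you might prioritise.

```python
from dataclasses import dataclass, field


@dataclass
class Opportunity:
    problem: str
    confidence: float  # hypothetical 0-1 score: how sure are we this is a big, real problem?


@dataclass
class DualTrack:
    opportunity_backlog: list = field(default_factory=list)
    delivery_backlog: list = field(default_factory=list)

    def add_opportunity(self, opportunity):
        self.opportunity_backlog.append(opportunity)
        # Re-prioritise every time a new opportunity comes in
        self.opportunity_backlog.sort(key=lambda o: o.confidence, reverse=True)

    def run_discovery(self, find_solutions):
        """Take the top opportunity, do discovery, queue the solutions for delivery."""
        if not self.opportunity_backlog:
            return None
        top = self.opportunity_backlog.pop(0)
        self.delivery_backlog.extend(find_solutions(top))
        return top


track = DualTrack()
track.add_opportunity(Opportunity("Pricing page is confusing", 0.4))
track.add_opportunity(Opportunity("Users drop off at step 3 of onboarding", 0.8))

# find_solutions stands in for the actual discovery work (interviews, prototypes, etc.)
worked_on = track.run_discovery(lambda o: [f"v1 fix for: {o.problem}"])
print(worked_on.problem)
print(track.delivery_backlog)
```

Notice that opportunities and solutions never share a list: an opportunity only turns into delivery work once discovery has produced something to build.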

9. Getting started with Continuous Discovery and Delivery

If you’re just starting out with discovery, an easy place to begin is to incorporate discovery into your existing cadence.

For example, if you’re already working in 2-week sprints, add discovery into that process. That means during Sprint Planning, don’t just look at the work you plan to deliver; also plan out what you intend to discover. Then track those items in your daily scrums as you would the delivery work, and ideally add them to your sprint reviews at the end of the sprint as well.

And don’t worry about fancy tools, frameworks, etc.

Just ask yourself: what do we need to learn/know about this opportunity to increase confidence?

And go do those things.

Of course, ideally those actions will involve speaking to users, but even just starting there is great.

When I work with product teams to help them get started with continuous discovery and delivery, my focus is always progress over perfection. You need to build a base first before you can start adding more complex techniques, otherwise there’s no way for you to put those techniques into practice. This is where training hits a wall and where mentoring/coaching comes in.

#shamelessselfplug FYI that’s what I do day-to-day. I work with companies (leaders and product teams) to improve their product practices like continuous discovery and delivery. And I also work with individuals (including 1-on-1) through my Product Mentorship.

So if you feel like you don’t need another training course but instead someone to help you put things into practice, then hit me up!

P.S. This wasn’t a planted ad. I’m actually at capacity for clients until October (spaces are still available in the Product Mentorship though), so I’m not fishing for clients, but I thought I’d mention it because I know some of you probably have no idea what I do!

And I create all this content so you don’t have to hire me!

Like this video on Product Discovery, where in the second half I walk through an easy process to get started.

Hope that helps!

As usual, if you got value from this, someone else will too, so forward it to a colleague. Share the love!

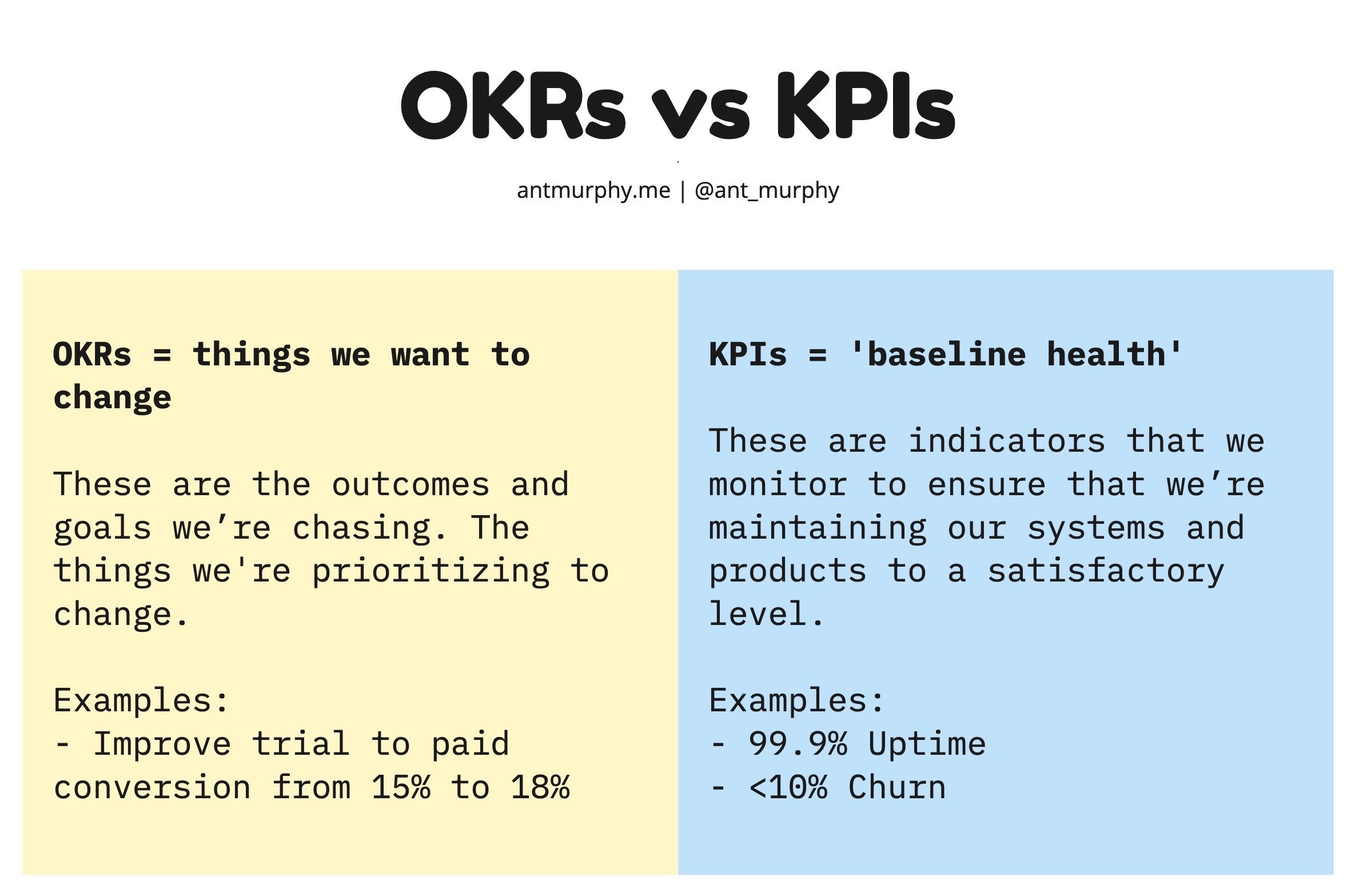

Your OKRs don’t live in a vacuum.

Yet this is exactly how I see many organizations treat their OKRs.

They jump on the bandwagon and create OKRs void of any context.

Here’s what I see all the time…